Mobile applications keep growing in popularity, and so does the use of the OAuth 2.0 protocol in mobile apps. Implementing the standard as is isn't enough to make OAuth 2.0 secure there: one needs to consider the specifics of mobile applications and apply additional security mechanisms.

In this article, I want to share the concepts behind mobile OAuth 2.0 attacks and the security mechanisms used to prevent them. These concepts are not new, but structured information on this topic is lacking. The main aim of this article is to fill that gap.

OAuth 2.0 nature and purpose

OAuth 2.0 is an authorization protocol that describes a way for a client service to gain secure access to the user's resources on a service provider. Thanks to OAuth 2.0, the user doesn't need to enter their password outside the service provider: the whole process comes down to clicking the «I agree to provide access to…» button.

A provider is a service that owns the user data and, with the user's permission, provides third-party services (clients) with secure access to this data. A client is an application that wants to get the user data stored by the provider.

Soon after the OAuth 2.0 protocol was released, it was adapted for authentication, even though it wasn't meant for that. Using OAuth 2.0 for authentication shifts the attack vector from the data stored at the service provider to the user accounts of the client service.

But authentication was just the beginning. In the era of mobile apps and the pursuit of higher conversion rates, logging into an app with just one button sounded nice. Developers adapted OAuth 2.0 for mobile use. Of course, few worried about mobile app security and specifics: zap, and into production they went! Then again, OAuth 2.0 doesn't work well outside of web applications: mobile and desktop apps share the same problems.

So, let’s figure out how to make mobile OAuth 2.0 secure.

How does it work?

There are two major mobile OAuth 2.0 security issues:

- Untrusted client. Some mobile applications don't have a backend for OAuth 2.0, so the client part of the protocol flow runs on the mobile device.

- Redirections from a browser to a mobile app behave differently depending on the system settings, the order in which applications were installed, and other magic.

Let's look at these issues in depth.

Mobile application is a public client

To understand the roots and consequences of the first issue, let's see how OAuth 2.0 works in the case of server-to-server interaction and then compare it with OAuth 2.0 in the case of client-to-server interaction.

In both cases, it all starts with the client service registers on the provider service and receives

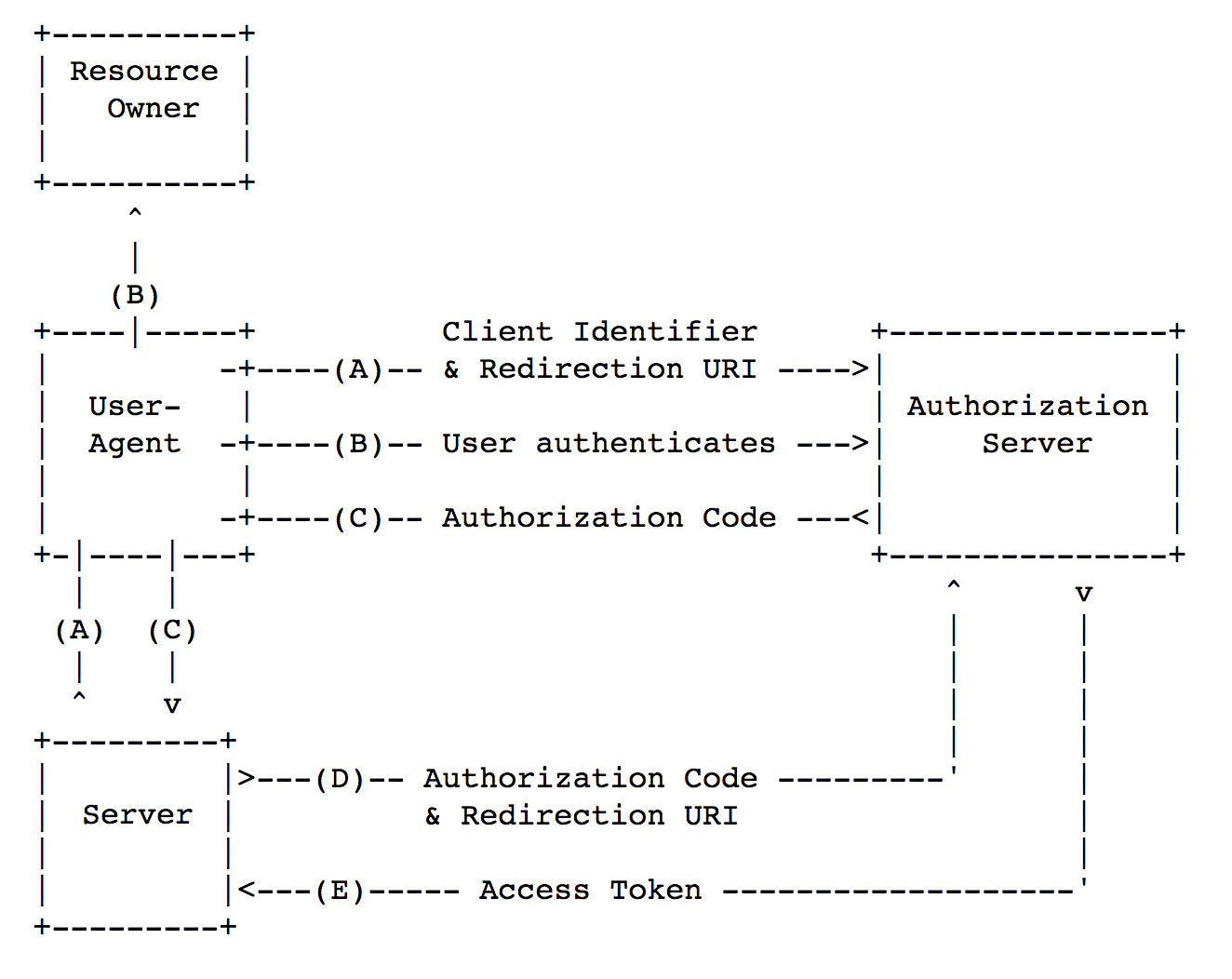

client_id and,in some cases, client_secret. client_id is a public value, and it’s required for the client service identification as opposed to client_secret value, which is private. You can read more about the registration process in RFC 7591.The scheme below shows the way OAuth 2.0 operates in case of server-to-server interaction.

Picture origin: https://tools.ietf.org/html/rfc6749#section-1.2
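Returning briefly to registration: the client's request can be pictured as a small JSON document. Below is a minimal sketch, assuming the provider supports RFC 7591 dynamic registration. The metadata field names come from RFC 7591, while the function name, client names and redirect URIs are hypothetical. It illustrates the point of this section: a public client, such as a mobile app without a backend, registers with `token_endpoint_auth_method` set to `none`, because it has nowhere safe to keep a client_secret.

```python
import json


def build_registration_request(client_name, redirect_uris, is_public):
    """Return RFC 7591 client metadata as a JSON string (hypothetical helper)."""
    metadata = {
        "client_name": client_name,
        "redirect_uris": redirect_uris,
        "grant_types": ["authorization_code"],
        "response_types": ["code"],
        # A public client (e.g. a backend-less mobile app) cannot keep a secret,
        # so it asks for no client authentication at the token endpoint.
        # A confidential client (a server) authenticates with client_secret.
        "token_endpoint_auth_method": "none" if is_public else "client_secret_basic",
    }
    return json.dumps(metadata)
```

The provider's answer would carry the issued client_id and, for a confidential client only, a client_secret.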

The OAuth 2.0 protocol can be divided into three main steps:

- [steps A-C] Receive an authorization_code (hereinafter, code).

- [steps D-E] Exchange code for access_token.

- Get the resource via access_token.

Let's elaborate on the process of getting the code value:

- [Step A] The client redirects the user to the service provider.

- [Step B] The service provider asks the user for permission to provide the client with the data (arrow B up). The user grants data access (arrow B to the right).

- [Step C] The service provider returns code to the user's browser, which redirects code to the client.

Let's talk more about the process of getting access_token:

- [Step D] The client server sends a request for access_token. The request includes code, client_secret and redirect_uri.

- [Step E] If code, client_secret and redirect_uri are valid, access_token is provided.
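As a rough sketch of step D, the client server builds a form-encoded body like the one below. It assumes the field names of RFC 6749, section 4.1.3 (which also requires `grant_type=authorization_code`) and sends client_secret in the body, as the steps above describe; RFC 6749 also allows HTTP Basic authentication instead. The function name and the values used in testing are hypothetical.

```python
from urllib.parse import urlencode


def build_token_request_body(code, redirect_uri, client_id, client_secret):
    """Form body for step D: the client *server* exchanges code for access_token.

    client_secret is added on the backend only; it never reaches the user's
    browser or device.
    """
    return urlencode({
        "grant_type": "authorization_code",  # required by RFC 6749, section 4.1.3
        "code": code,
        "redirect_uri": redirect_uri,        # must match the one used in step A
        "client_id": client_id,
        "client_secret": client_secret,
    })
```

The body would be POSTed from the backend to the provider's token endpoint with `Content-Type: application/x-www-form-urlencoded`.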

The request for access_token follows the server-to-server scheme; therefore, in general, an attacker has to hack the client service server or the service provider server in order to steal access_token.

Now let's look at the mobile OAuth 2.0 scheme without a backend (client-to-server interaction).

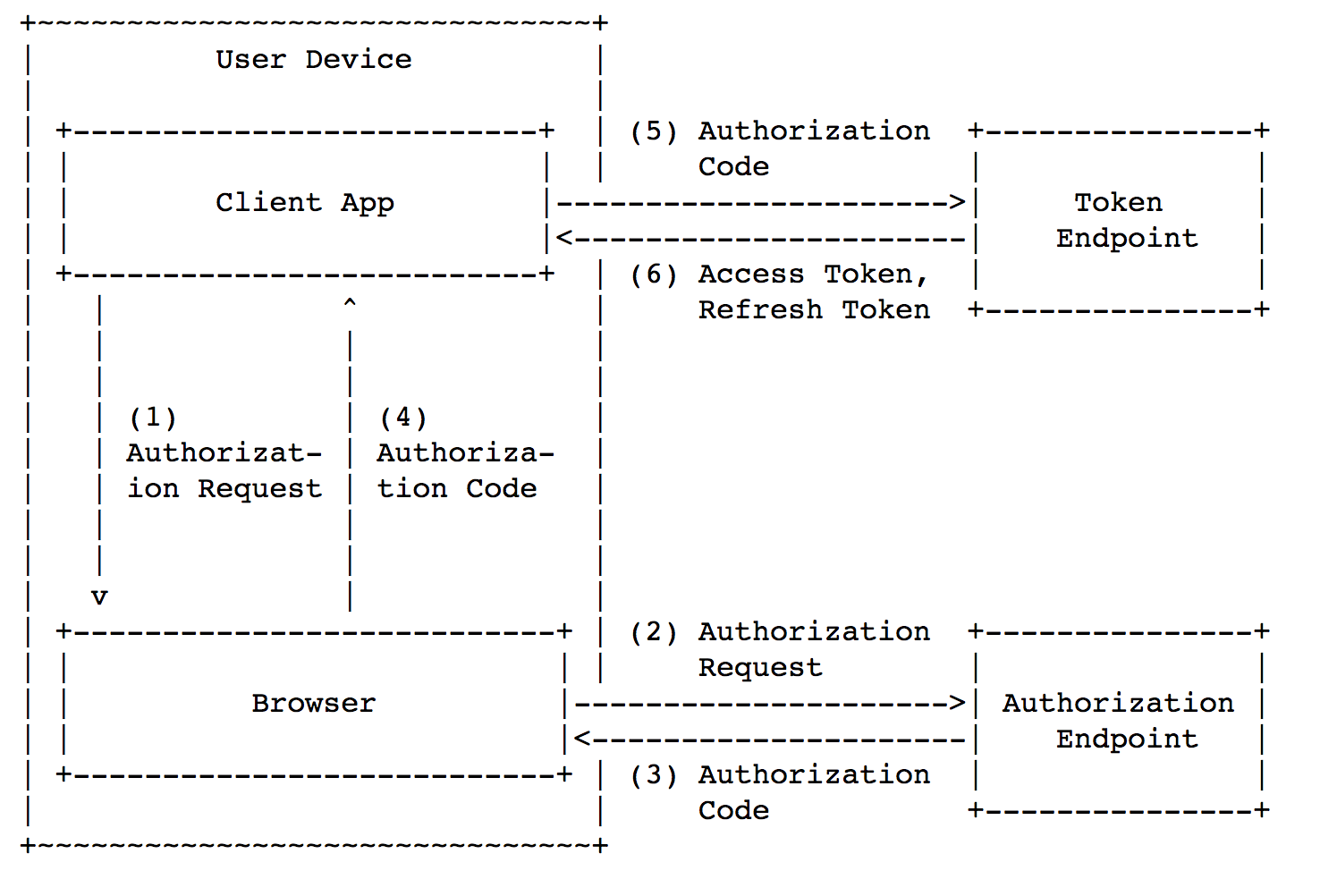

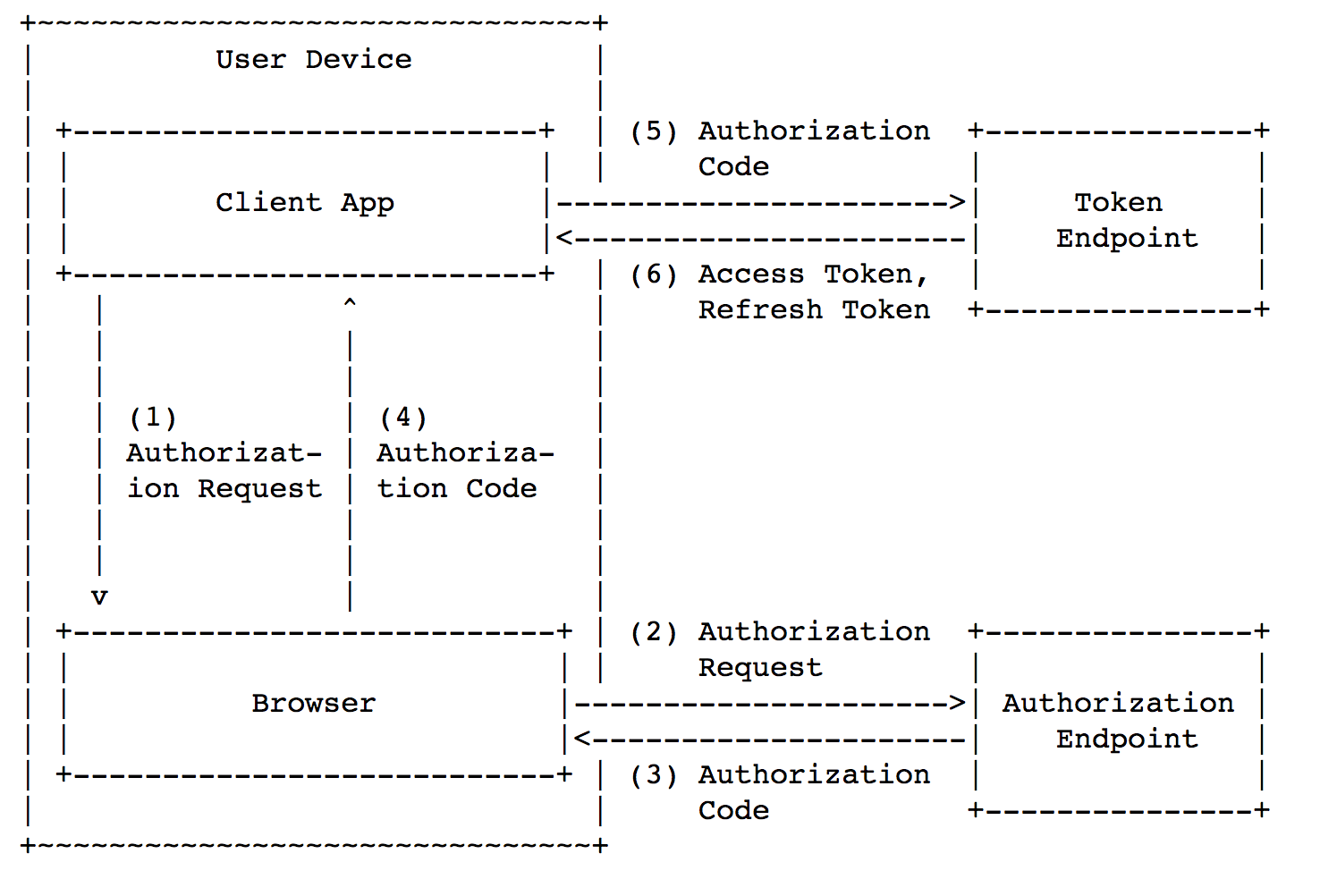

Picture origin: https://tools.ietf.org/html/rfc8252#section-4.1

The main scheme is divided into the same main steps:

- [steps 1-4 in the picture] Get code.

- [steps 5-6 in the picture] Exchange code for access_token.

- Gain resource access via access_token.

However, in this case the mobile app also performs the server functions; therefore, client_secret would be embedded into the application. As a result, client_secret cannot be kept hidden from an attacker on mobile devices. An embedded client_secret can be extracted in two ways: by analyzing the application-to-server traffic or by reverse engineering. Both are easy to do, and that's why client_secret is useless on mobile devices.

You might ask: «Why don't we get access_token right away?» You might think that this extra step is unnecessary. Furthermore, there's the Implicit Grant scheme that allows a client to receive access_token right away. Even though it can be used in some cases, Implicit Grant wouldn't work for secure mobile OAuth 2.0: it delivers access_token straight through the redirect, so whoever intercepts the redirect gets the token, and there is no separate token request to which the code_challenge protection described below could be attached.

Redirection on mobile devices

In general, the Custom URI Scheme and AppLink mechanisms are used for browser-to-app redirects. Neither of them is as secure as a redirect that stays within the browser.

Custom URI Scheme (or deep link) works as follows: a developer chooses an application scheme before deployment. The scheme can be arbitrary, and one device can have several applications with the same scheme.

Things are simple when every scheme on a device corresponds to exactly one application. But what if two applications register the same scheme on one device? How does the operating system decide which app to open when contacted via the Custom URI Scheme? Android will show a window offering a choice of apps to follow the link with. iOS doesn't define a procedure for this, so either application may be opened. Either way, an attacker gets a chance to intercept code or access_token.

Unlike Custom URI Scheme, AppLink guarantees that the right application opens, but this mechanism has several flaws:

- Every service client must undergo the verification procedure.

- Android users can turn AppLink off for a specific app in settings.

- Android versions older than 6.0 and iOS versions older than 9.0 don’t support AppLink.

All these AppLink flaws steepen the learning curve for potential service clients and may cause OAuth 2.0 to fail for some users under certain circumstances. That's why many developers don't choose AppLink as a substitute for browser redirects in the OAuth 2.0 protocol.

OK, what is there to attack?

These mobile OAuth 2.0 problems have given rise to some specific attacks. Let's see what they are and how they work.

Authorization Code Interception Attack

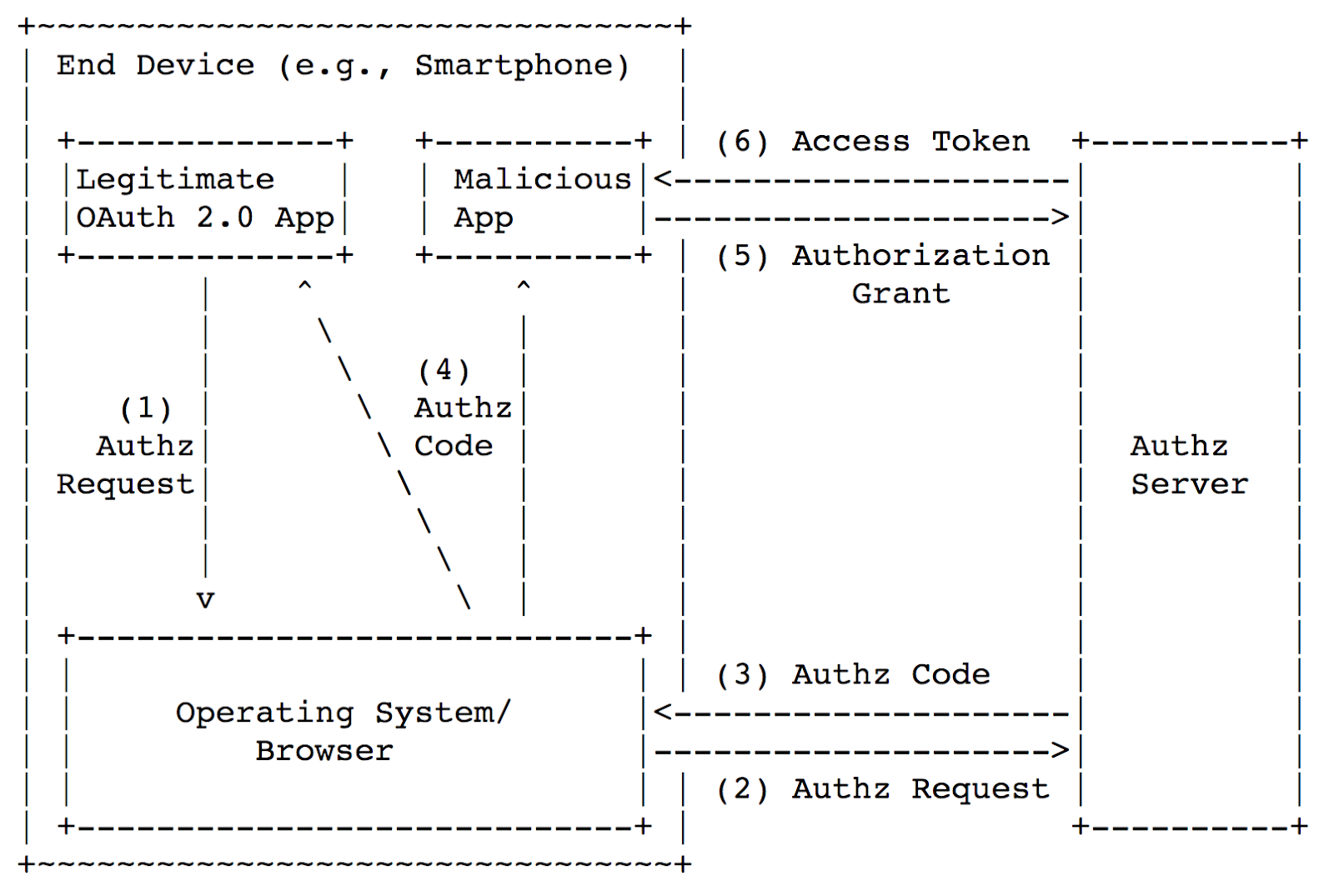

Let’s consider the situation where user device has a legitimate application (OAuth 2.0 client) and a malicious application which registered the same scheme as the legitimate one. The picture below shows the attack scheme.

Picture origin https://tools.ietf.org/html/rfc7636#section-1

Here's the problem: at the fourth step, the browser returns code to the application via the Custom URI Scheme, so code can be intercepted by a malicious app (since it registered the same scheme as the legitimate app). The malicious app then exchanges code for access_token and gains access to the user's data.

What's the protection? In some cases, you can use inter-process communication; we'll talk about it later. In general, you need a scheme called Proof Key for Code Exchange (PKCE). It's described in the scheme below.

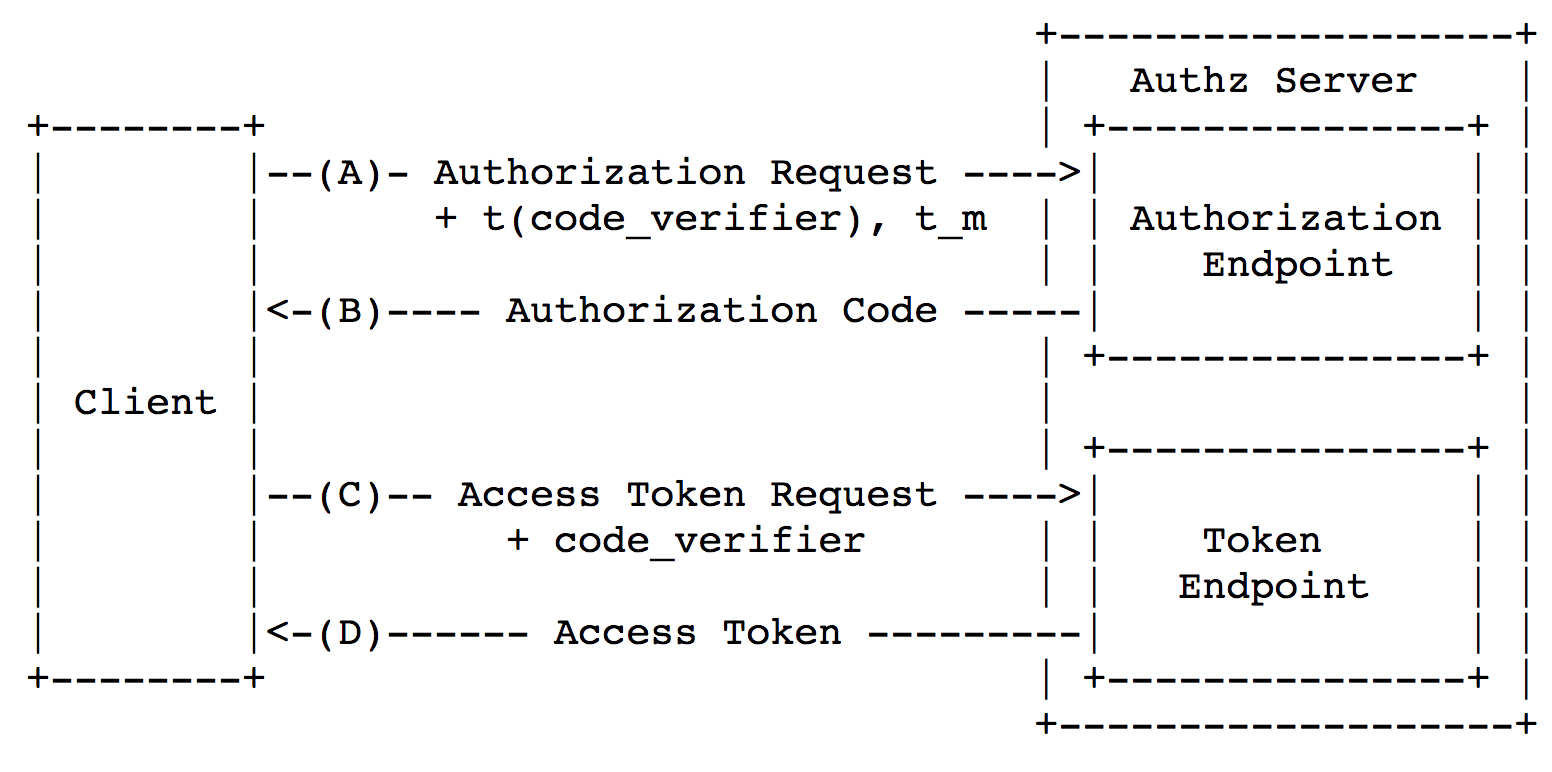

Picture origin: https://tools.ietf.org/html/rfc7636#section-1.1

The client request has several extra parameters: code_verifier, code_challenge (t(code_verifier) in the scheme) and code_challenge_method (t_m in the scheme).

- code_verifier is a random value at least 256 bits long that is used only once. So, a client must generate a new code_verifier for every code request.

- code_challenge_method is the name of a conversion function, usually SHA-256 (sent as S256).

- code_challenge is code_verifier converted with code_challenge_method and encoded in URL-safe Base64.

Converting code_verifier into code_challenge is necessary to rebuff attack vectors based on code_verifier interception (for example, from the device system logs) when requesting code.

If a user device doesn't support SHA-256, the client is allowed to use the plain conversion of code_verifier. In all other cases, SHA-256 must be used.

This is how this scheme works:

- The client generates code_verifier and stores it.

- The client chooses code_challenge_method and derives code_challenge from code_verifier.

- [Step A] The client requests code, with code_challenge and code_challenge_method added to the request.

- [Step B] The provider stores code_challenge and code_challenge_method on the server and returns code to the client.

- [Step C] The client requests access_token, with code_verifier added to the request.

- The provider computes code_challenge from the incoming code_verifier and compares it to the code_challenge it saved.

- [Step D] If the values match, the provider gives the client access_token.

To understand why code_challenge prevents code interception, let's look at the protocol flow from the attacker's perspective.

- First, the legitimate app requests code (code_challenge and code_challenge_method are sent together with the request).

- The malicious app intercepts code (but not code_challenge, since code_challenge is not in the response).

- The malicious app requests access_token (with a valid code, but without a valid code_verifier).

- The server notices that the code_challenge doesn't match and returns an error.

Note that the attacker can't guess code_verifier (a random 256-bit value!) or find it somewhere in the logs (since the first request actually transmitted code_challenge).

So, code_challenge answers the service provider's question: «Is access_token requested by the same app client that requested code, or by a different one?»

OAuth 2.0 CSRF

OAuth 2.0 CSRF is relatively harmless when OAuth 2.0 is used for authorization. It's a completely different story when OAuth 2.0 is used for authentication: in that case, OAuth 2.0 CSRF often leads to account takeover.

Let's look more closely at a CSRF attack on OAuth 2.0, using the example of a taxi app client and the provider.com provider. First, an attacker logs into the attacker@provider.com account on his own device and receives code for the taxi app. Then he interrupts the OAuth 2.0 process and generates a link:

com.taxi.app://oauth?code=b57b236c9bcd2a61fcd627b69ae2d7a6eb5bc13f2dc25311348ee08df43bc0c4

Then the attacker sends this link to the victim, for example, disguised as an email or text message from the taxi staff. The victim clicks the link, and the taxi app opens and receives access_token. As a result, the victim ends up in the attacker's taxi account. Unaware of that, the victim uses this account: makes trips, enters personal data, etc. Now the attacker can log into the account the victim has been using at any time, since it's linked to attacker@provider.com. The CSRF login attack allowed the attacker to steal an account.

CSRF attacks are usually rebuffed with a CSRF token (it's also called state), and OAuth 2.0 is no exception. How to use the CSRF token:

- The client application generates a CSRF token and saves it on the user's mobile device.

- The client application includes the CSRF token in the code access request.

- The server returns the same CSRF token with code in its response.

- The client application compares the incoming and saved CSRF tokens. If their values match, the process goes on.

CSRF token requirements: the token is a nonce that must be at least 256 bits long and obtained from a cryptographically secure source of pseudo-random numbers.
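Generating and checking state takes only a few lines. Here is a minimal sketch with hypothetical helper names, assuming Python's `secrets` module as the cryptographically secure generator: 32 random bytes give the required 256 bits, and `hmac.compare_digest` compares the tokens in constant time.

```python
import hmac
import secrets


def new_state():
    """CSRF token for the code request: 256 bits from the OS CSPRNG, hex-encoded."""
    return secrets.token_hex(32)


def state_matches(saved_state, returned_state):
    """Continue the flow only if the provider echoed back exactly the saved token."""
    if not saved_state or returned_state is None:
        return False
    # Compare as bytes so arbitrary (even non-ASCII) input from a URL cannot raise.
    return hmac.compare_digest(saved_state.encode("utf-8"), returned_state.encode("utf-8"))
```

The saved value should live only as long as the pending authorization. A callback that arrives with a missing or different state, like the attacker's link in the taxi example, is dropped.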

In a nutshell, the CSRF token allows a client application to answer the following question: «Was it me who initiated the access_token request, or is someone trying to trick me?»

Hardcoded client secret

Mobile applications without a backend sometimes store hardcoded client_id and client_secret values. Of course, they can be easily extracted by reverse engineering the app.

The impact of exposing client_id and client_secret depends heavily on how much trust the service provider places in a particular client_id and client_secret pair. Some providers use them just to distinguish one client from another, while others open hidden API endpoints or apply softer rate limits to some clients. The article Why OAuth API Keys and Secrets Aren't Safe in Mobile Apps elaborates more on this topic.
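To show how little effort the simplest form of extraction takes, here is a toy version of the Unix `strings` utility: it pulls every run of printable ASCII out of a binary blob. The function name and the blob used in testing are hypothetical stand-ins for a real app binary. Actual reverse engineering tools go much further, but even this is enough to surface a hardcoded secret stored as plain text.

```python
import re


def printable_strings(blob: bytes, min_len: int = 8) -> list:
    """Return runs of at least min_len printable ASCII characters found in blob."""
    pattern = rb"[\x20-\x7e]{%d,}" % min_len
    return [match.group().decode("ascii") for match in re.finditer(pattern, blob)]
```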

Malicious app acting as a legitimate client

Some malicious apps can imitate legitimate apps and display a consent screen on their behalf (a consent screen is a screen where a user sees: «I agree to provide access to…»). The user might click «allow» and give the malicious app their data.

Android and iOS provide mechanisms for applications to cross-check each other: a provider application can make sure that a client application is legitimate, and vice versa.

Unfortunately, if the OAuth 2.0 flow goes through a browser, it's impossible to defend against this attack.

Other attacks

We took a closer look at the attacks exclusive to mobile OAuth 2.0. However, let's not forget about the attacks on the original OAuth 2.0: redirect_uri substitution, traffic interception over an insecure connection, etc. You can read more about them in the resources listed under Further reading.

How to do it securely?

We've learned how the OAuth 2.0 protocol works and what vulnerabilities it has on mobile devices. Now let's put the separate pieces together into a secure mobile OAuth 2.0 scheme.

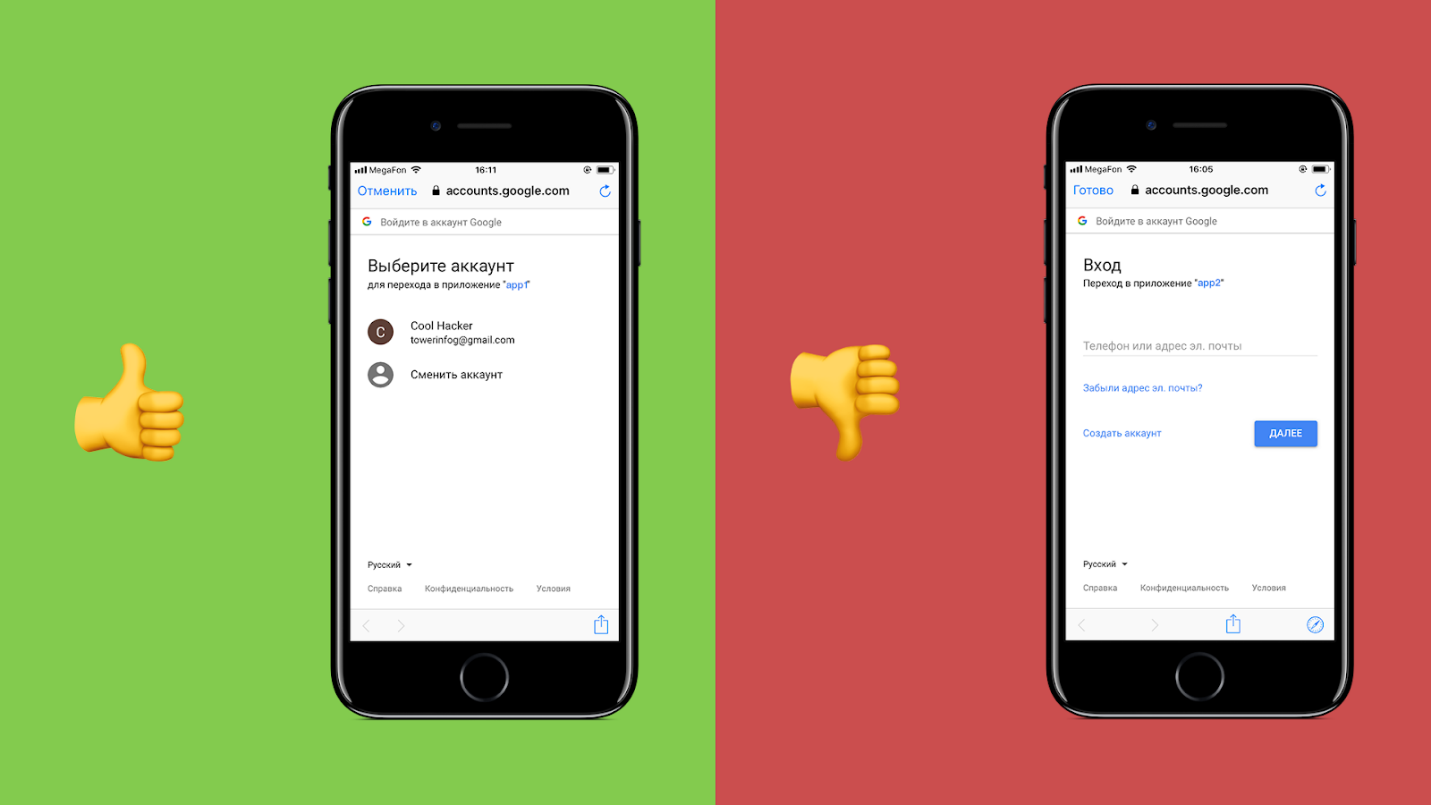

Good, bad OAuth 2.0

Let's start with the right way to show the consent screen. Mobile devices offer two ways of opening a web page in a mobile application.

The first way is via Browser Custom Tab (on the left in the picture). Note: on Android, Browser Custom Tab is called Chrome Custom Tab, and on iOS, SafariViewController. It's just a browser tab displayed in the app: there's no visual switching between applications.

The second way is via WebView (on the right in the picture), which I consider bad for mobile OAuth 2.0.

WebView is an embedded mobile app browser.

"Embedded browser" means that WebView is forbidden from accessing cookies, storage, cache, history, and other Safari and Chrome data. The reverse is also true: Safari and Chrome cannot access WebView data.

"Mobile app browser" means that a mobile app that runs WebView has full access to cookies, storage, cache, history and other WebView data.

Now, imagine: a user clicks «enter with…» and a malicious app's WebView asks for their service provider login and password.

Epic fail:

- The user enters their service provider login and password in an app that can easily steal this data.

- OAuth 2.0 was originally designed precisely so that users wouldn't have to enter their service provider login and password.

- The user gets used to entering their login and password anywhere, which increases the risk of phishing.

Considering all the cons of WebView, an obvious conclusion offers itself: use Browser Custom Tab for consent screen.

If anyone has arguments in favor of WebView over Browser Custom Tab, I'd appreciate it if you wrote about them in the comments.

Secure mobile OAuth 2.0 scheme

We're going to use the Authorization Code Grant scheme, since it allows us to add code_challenge as well as state and so defend against the code interception attack and OAuth 2.0 CSRF.

Picture origin: https://tools.ietf.org/html/rfc8252#section-4.1
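The code access request in this scheme can be assembled with the standard library. A minimal sketch: the parameter names, their order and the o2.mail.ru endpoint mirror the example request of this section, the function name is hypothetical, and state and code_challenge are passed in, generated as described in the PKCE and OAuth 2.0 CSRF sections. `urlencode` percent-encodes the custom-scheme redirect_uri and the comma in scope.

```python
from urllib.parse import urlencode


def build_code_request(authorize_endpoint, client_id, redirect_uri, scope, state, code_challenge):
    """Steps 1-2: the URL opened in a Browser Custom Tab to request code."""
    params = {
        "redirect_uri": redirect_uri,
        "state": state,                    # CSRF token saved on the device
        "code_challenge": code_challenge,  # derived from the secret code_verifier
        "code_challenge_method": "S256",
        "scope": scope,
        "response_type": "code",
        "client_id": client_id,
    }
    return authorize_endpoint + "?" + urlencode(params)
```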

Code access request (steps 1-2) will look as follows:

https://o2.mail.ru/code?
redirect_uri=com.mail.cloud.app%3A%2F%2Foauth&
state=927489cb2fcdb32e302713f6a720397868b71dd2128c734181983f367d622c24&
code_challenge=ZjYxNzQ4ZjI4YjdkNWRmZjg4MWQ1N2FkZjQzNGVkODE1YTRhNjViNjJjMGY5MGJjNzdiOGEzMDU2ZjE3NGFiYw%3D%3D&
code_challenge_method=S256&
scope=email%2Cid&
response_type=code&
client_id=984a644ec3b56d32b0404777e1eb73390c

At step 3, the browser gets a response with a redirect:

com.mail.cloud.app://oauth?
code=b57b236c9bcd2a61fcd627b69ae2d7a6eb5bc13f2dc25311348ee08df43bc0c4&
state=927489cb2fcdb32e302713f6a720397868b71dd2128c734181983f367d622c24

At step 4, the browser opens the Custom URI Scheme link and passes code and the CSRF token (state) over to the client app.
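Steps 4 and 5 on the client side can be sketched as one hypothetical handler: parse the Custom URI Scheme callback, drop it unless state matches the saved CSRF token, and only then assemble the parameters of the access_token request. The function name is illustrative and the parameter set mirrors the example token request below; the handler also surfaces an `error` parameter, which RFC 6749, section 4.1.2.1 defines for failed authorization.

```python
import hmac
from urllib.parse import parse_qs, urlsplit


def handle_redirect(redirect_url, saved_state, code_verifier, client_id):
    """Validate the callback (step 4) and return the access_token request parameters (step 5)."""
    query = parse_qs(urlsplit(redirect_url).query)
    if "error" in query:
        raise RuntimeError("authorization failed: " + query["error"][0])
    returned_state = query.get("state", [""])[0]
    # A missing or different state means this app did not start the flow (OAuth 2.0 CSRF).
    if not hmac.compare_digest(saved_state.encode("utf-8"), returned_state.encode("utf-8")):
        raise PermissionError("state mismatch")
    code = query.get("code", [""])[0]
    if not code:
        raise ValueError("callback carries no code")
    # code_verifier leaves the app only now, inside the token request itself.
    return {"code_verifier": code_verifier, "code": code, "client_id": client_id}
```

Fed the attacker's link from the taxi example, which carries code but no state, the handler raises `PermissionError` instead of logging the victim into a foreign account.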

access_token request (step 5):

https://o2.mail.ru/token?
code_verifier=e61748f28b7d5daf881d571df434ed815a4a65b62c0f90bc77b8a3056f174abc&
code=b57b236c9bcd2a61fcd627b69ae2d7a6eb5bc13f2dc25311348ee08df43bc0c4&
client_id=984a644ec3b56d32b0404777e1eb73390c

The last step brings a response with access_token.

This scheme is generally secure, but there are some special cases when OAuth 2.0 can be made simpler and more secure.

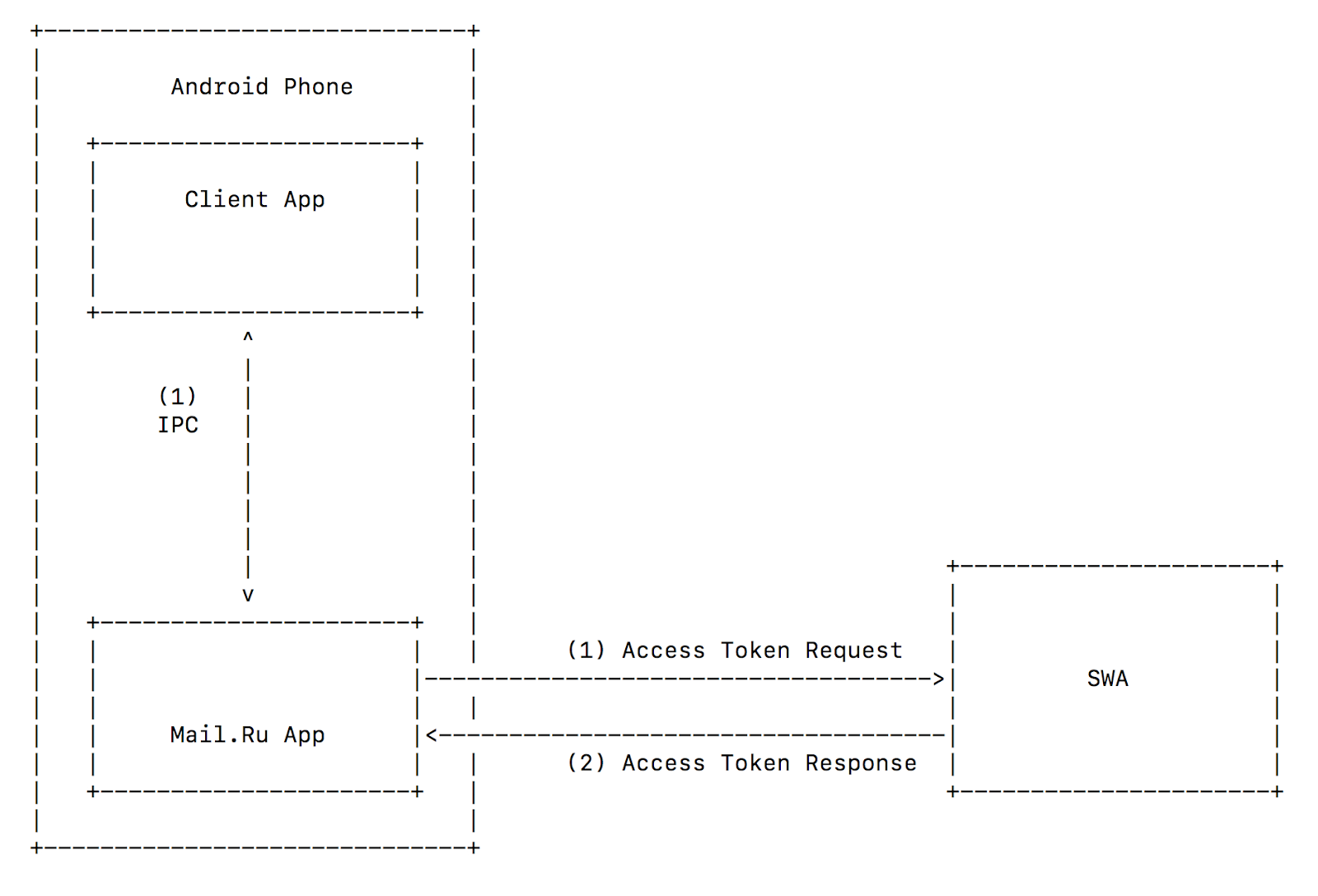

Android IPC

Android has a mechanism for bidirectional data communication between processes: IPC (inter-process communication). IPC is better than Custom URI Scheme for two reasons:

- An app that opens an IPC channel can confirm the authenticity of the app it's opening by its certificate. The reverse is also true: the opened app can confirm the authenticity of the app that opened it.

- If a sender sends a request via an IPC channel, it receives the answer via the same channel. Together with the cross-check (item 1), this means that no foreign process can intercept access_token.
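The certificate cross-check from the first item boils down to comparing a fingerprint against an allowlist. The sketch below shows only that comparison logic in Python; on Android, the package name and signing certificate of the other app would come from platform APIs such as PackageManager. The allowlist contents and all names here are hypothetical.

```python
import hashlib

# Hypothetical allowlist: package name -> SHA-256 fingerprints (hex) of the
# signing certificates this app is willing to talk to over IPC.
TRUSTED_PEERS = {
    "com.mail.cloud.app": {"<sha256-hex-of-release-certificate>"},
}


def certificate_fingerprint(cert_der: bytes) -> str:
    """SHA-256 over the DER-encoded signing certificate, as lowercase hex."""
    return hashlib.sha256(cert_der).hexdigest()


def is_trusted_peer(package_name: str, cert_der: bytes, trusted=TRUSTED_PEERS) -> bool:
    """Accept the peer only if its package is known AND its certificate matches.

    The package name alone is not enough: any app can take a name on a device
    where the real app is not installed.
    """
    return certificate_fingerprint(cert_der) in trusted.get(package_name, set())
```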

Therefore, we can use Implicit Grant to simplify the mobile OAuth 2.0 scheme. No code_challenge and no state also means a smaller attack surface. We can also lower the risk of malicious apps posing as legitimate clients to steal user accounts.

SDK for clients

Besides implementing this secure mobile OAuth 2.0 scheme, a provider should develop an SDK for its clients. It'll simplify the OAuth 2.0 implementation on the client side and at the same time reduce the number of errors and vulnerabilities.

Conclusions

Let me summarise. Here is a (basic) secure mobile OAuth 2.0 checklist for OAuth 2.0 providers:

- A strong foundation is crucial. In the case of mobile OAuth 2.0, the foundation is the scheme or protocol chosen for implementation. It's easy to make mistakes while implementing your own OAuth 2.0 scheme. Others have already taken the knocks and learned their lessons; there's nothing wrong with learning from their mistakes and getting a secure implementation right in one go. The most secure mobile OAuth 2.0 scheme is described in the «How to do it securely?» section.

- access_token and other sensitive data must be stored in Keychain on iOS and in Internal Storage on Android. These storages were developed specifically for that. Content Provider can be used on Android, but it must be securely configured.

- client_secret is useless unless it's stored on a backend. Do not give it away to public clients.

- Do not use WebView for consent screen; use Browser Custom Tab.

- To defend against the code interception attack, use code_challenge.

- To defend against OAuth 2.0 CSRF, use state.

- Use HTTPS everywhere, with downgrade to HTTP forbidden. Here is a 3-minute demo explaining why (with an example from a bug bounty).

- Follow the cryptography standards (choice of algorithm, length of tokens, etc.). You may copy these choices and figure out why they were made, but do not roll your own crypto.

- code must be single-use and short-lived.

- On the client app side, check what you open for OAuth 2.0; on the provider app side, check who opens you for OAuth 2.0.

- Keep in mind common OAuth 2.0 vulnerabilities. Mobile OAuth 2.0 extends and complements the original protocol; therefore, the exact-match redirect_uri check and the other recommendations for the original OAuth 2.0 still apply.

- Provide your clients with an SDK. They'll have fewer bugs and vulnerabilities, and it'll be easier for them to implement your OAuth 2.0.

Further reading

- «Vulnerabilities of mobile OAuth 2.0» https://www.youtube.com/watch?v=vjCF_O6aZIg

- OAuth 2.0 race condition research https://hackerone.com/reports/55140

- Almost everything about OAuth 2.0 in one place https://oauth.net/2/

- Why OAuth API Keys and Secrets Aren't Safe in Mobile Apps https://developer.okta.com/blog/2019/01/22/oauth-api-keys-arent-safe-in-mobile-apps

- [RFC] OAuth 2.0 for Native Apps https://tools.ietf.org/html/rfc8252

- [RFC] Proof Key for Code Exchange by OAuth Public Clients https://tools.ietf.org/html/rfc7636

- [RFC] OAuth 2.0 Threat Model and Security Considerations https://tools.ietf.org/html/rfc6819

- [RFC] OAuth 2.0 Dynamic Client Registration Protocol https://tools.ietf.org/html/rfc7591

- Google OAuth 2.0 for Mobile & Desktop Apps https://developers.google.com/identity/protocols/OAuth2InstalledApp

Credits

Thanks to all who helped me to write this article. Especially to Sergei Belov, Andrei Sumin, Andrey Labunets for the feedback about technical details, to Pavel Kruglov for the English translation and to Daria Yakovleva for the help with release of Russian version of this article.